Odroid HC1 based swarm cluster in a 19″ rack

For several months now, I’ve been running my applications on a homemade cluster of 4 Odroid HC1 running Docker containers orchestrated by Swarm. Of course, all the HC1 are powered by my homemade power supply.

I chose the HC1 over the MC1 because of its SSD support: running the system from an SSD is a lot faster than from a microSD card.

Below is the story of this build…

4 Odroid HC1 in a 3d printed 19″ rack

I made a custom fan-cooled 19″ rack mount for the Odroids.

The 3D print design is available on Thingiverse.

Initial mount:

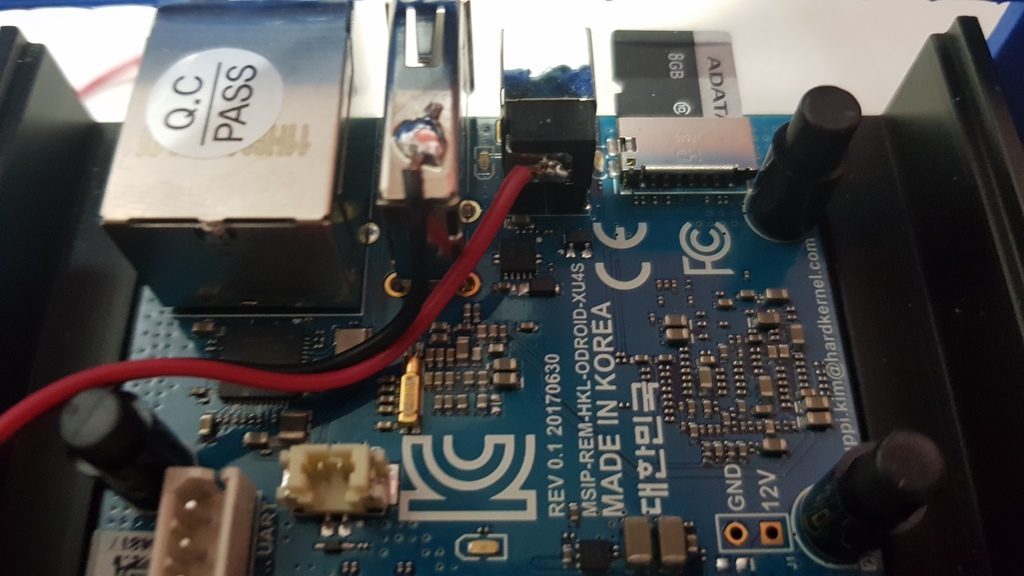

I soldered Dupont cables to power each fan directly from the 5V input of its HC1:

Result with all fans mounted:

Final result in the rack:

Base install

Archlinux

Nothing special here, just follow the official Arch Linux ARM documentation for each HC1:

https://archlinuxarm.org/platforms/armv7/samsung/odroid-xu4

Saltstack

As I use SaltStack to “templatize” all my servers, I installed the Salt master and minions (the NAS is the master for all the other servers). I already documented this here.

From the Salt master, a simple check shows that all nodes are under control:

```shell
salt -E "node[1-4].local.lan" cmd.run 'cat /etc/hostname'

node4.local.lan:
    node4
node3.local.lan:
    node3
node2.local.lan:
    node2
node1.local.lan:
    node1
```

SSD as root FS

Partition the SSD:

```shell
salt -E "node[1-4].local.lan" cmd.run 'echo -e "o\nn\np\n1\n\n\n\nw\nq\n" | fdisk /dev/sda'
```

Format the future root partition:

```shell
salt -E "node[1-4].local.lan" cmd.run 'mkfs.ext4 -L ROOT /dev/sda1'
```

Mount the SSD root partition:

```shell
salt -E "node[1-4].local.lan" cmd.run 'mount /dev/sda1 /mnt/'
```

Clone the SD card to the SSD root partition:

```shell
salt -E "node[1-4].local.lan" cmd.run 'cd /;tar -c --one-file-system -f - . | (cd /mnt/; tar -xvf -)'
```

Change boot parameters so root is /dev/sda1:

```shell
salt -E "node[1-4].local.lan" cmd.run 'sed -i -e "s/root\=PARTUUID=\${uuid}/root=\/dev\/sda1/" /boot/boot.txt'
```
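Before running a `sed -i` on every node, it can be reassuring to try the substitution on a sample line. The sketch below is only that: `rewrite_root` is a hypothetical helper, and the sample `bootargs` line is an assumption about what boot.txt contains, so check your own file for the exact wording.

```shell
# Hypothetical helper: apply the same substitution to text read from stdin.
rewrite_root() {
    sed -e 's/root=PARTUUID=\${uuid}/root=\/dev\/sda1/'
}

# Assumed sample line, not copied from a real boot.txt.
sample='setenv bootargs "console=ttySAC2,115200n8 root=PARTUUID=${uuid} rw rootwait"'
printf '%s\n' "$sample" | rewrite_root
```

If the printed line shows `root=/dev/sda1` and nothing else changed, the expression should be safe to apply in place.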

Recompile boot config:

```shell
salt -E "node[1-4].local.lan" cmd.run 'pacman -S --noconfirm uboot-tools'
salt -E "node[1-4].local.lan" cmd.run 'cd /boot; ./mkscr'
```

Reboot:

```shell
salt -E "node[1-4].local.lan" cmd.run 'reboot'
```

Remove everything from the SD card and put the /boot files at its root:

```shell
salt -E "node[1-4].local.lan" cmd.run 'mount /dev/mmcblk0p1 /mnt'
salt -E "node[1-4].local.lan" cmd.run 'cp -R /mnt/boot/* /boot/'
salt -E "node[1-4].local.lan" cmd.run 'rm -Rf /mnt/*'
salt -E "node[1-4].local.lan" cmd.run 'mv /boot/* /mnt/'
```

Adapt boot.txt, because the boot files are now at the root of the boot partition and no longer in the /boot directory:

```shell
salt -E "node[1-4].local.lan" cmd.run 'sed -i -e "s/\/boot\//\//" /mnt/boot.txt'
salt -E "node[1-4].local.lan" cmd.run 'pacman -S --noconfirm uboot-tools'
salt -E "node[1-4].local.lan" cmd.run 'cd /mnt/; ./mkscr'
salt -E "node[1-4].local.lan" cmd.run 'cd /; umount /mnt'
salt -E "node[1-4].local.lan" cmd.run 'reboot'
```

Check that /dev/sda is root :

```shell
salt -E "node[1-4].local.lan" cmd.run 'df -h | grep sda'

node4.local.lan:
    /dev/sda1 118G 1.2G 117G 0% /
node3.local.lan:
    /dev/sda1 118G 1.2G 117G 0% /
node1.local.lan:
    /dev/sda1 118G 1.2G 117G 0% /
node2.local.lan:
    /dev/sda1 118G 1.2G 117G 0% /
```

SSD Benchmark

Benchmarking is complex and I don’t claim to have done it perfectly, but it at least gives an idea of how fast an SSD can be on the HC1.

The SSD connected to each of my HC1 is a SanDisk X400 128 GB.

I ran each of the following tests 3 times.

hdparm -tT /dev/sda => 362.6 MB/s

dd write 4k => 122 MB/s

```shell
sync; dd if=/dev/zero of=/benchfile bs=4k count=1048476; sync
```

dd write 1M => 119 MB/s

```shell
sync; dd if=/dev/zero of=/benchfile bs=1M count=4096; sync
```

dd read 4k => 307 MB/s

```shell
echo 3 > /proc/sys/vm/drop_caches
dd if=/benchfile of=/dev/null bs=4k count=1048476
```

dd read 1M => 357 MB/s

```shell
echo 3 > /proc/sys/vm/drop_caches
dd if=/benchfile of=/dev/null bs=1M count=4096
```
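To put the dd figures in context, here is the arithmetic behind them as a small sketch. The two helper names are mine, not part of dd: the first computes how many bytes a run moves (block size times block count), the second turns a byte count and a duration into decimal MB/s, which is the unit GNU dd prints.

```shell
# Total bytes moved by a dd run: block size (in bytes) times block count.
dd_total_bytes() {
    echo $(( $1 * $2 ))
}

# Throughput in decimal MB/s (1 MB = 1,000,000 bytes) from bytes and seconds.
throughput_mb_s() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

dd_total_bytes 4096 1048476     # the bs=4k runs
dd_total_bytes 1048576 4096     # the bs=1M runs
```

Both block sizes end up moving roughly 4.29 GB (4294557696 and 4294967296 bytes), far more than the HC1’s 2 GB of RAM, so the page cache cannot hold the whole file.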

I ran the same tests with IRQ affinity set to the big cores, but it did not show any significant impact on performance.

Finalize installation

I’m not going to copy-paste all my SaltStack states and templates here, as they obviously depend on personal needs and tastes.

Basically, my “HC1 Node” template does the following on each node:

- Change mirrorlist

- Install custom sysadminscripts

- Remove alarmuser

- Add some sysadmin tools (lsof, wget, etc.)

- Change mmc and ssd scheduler to deadline

- Add my user

- Install cron

- Configure log rotate

- Set journald config (RuntimeMaxUse=50M and Storage=volatile to reduce writes to flash storage)

- Add mail ability (ssmtp)
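As an illustration of the journald item in the list above, here is a minimal sketch that writes the two settings as a drop-in file. It is not my actual Salt state: the helper name and the file name `volatile.conf` are made up for the example, and it is run against a scratch directory so that it is harmless to try.

```shell
# Hypothetical helper: write a journald drop-in into the given directory.
write_journald_dropin() {
    dir=$1
    mkdir -p "$dir"
    cat > "$dir/volatile.conf" <<'EOF'
[Journal]
Storage=volatile
RuntimeMaxUse=50M
EOF
}

# Demo in a scratch directory. On a node the target would be
# /etc/systemd/journald.conf.d/, followed by a restart of systemd-journald.
demo_dir=$(mktemp -d)
write_journald_dropin "$demo_dir"
cat "$demo_dir/volatile.conf"
rm -rf "$demo_dir"
```

With Storage=volatile the journal lives only in /run, so logs are lost at reboot; that is the price for sparing the flash storage.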

Then I changed the password of my user with SaltStack:

```shell
salt "node1.local.lan" shadow.gen_password 'xxxxxx' # returns the password hash
salt "node2.local.lan" shadow.set_password myuser 'the_hash_here'
```

Finally, to ensure that no disk corruption would stop a node from booting, I forced an fsck at boot time on all nodes by:

- adding “fsck.mode=force” to the kernel line in /boot/boot.txt

- recompiling it with mkscr

- rebooting
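The first of these steps can be scripted. The sketch below is an assumption about how one might do it, not what I actually ran: `add_forced_fsck` is a hypothetical helper, and it assumes the kernel line starts with `setenv bootargs "` and ends with the closing quote. It relies on GNU sed for `-i`.

```shell
# Hypothetical helper: append fsck.mode=force to the bootargs line of a
# boot.txt-style file, unless the option is already present.
add_forced_fsck() {
    file=$1
    if ! grep -q 'fsck\.mode=force' "$file"; then
        sed -i -e '/^setenv bootargs /s/"$/ fsck.mode=force"/' "$file"
    fi
}

# Demo on a scratch copy with an assumed bootargs line.
demo_file=$(mktemp)
printf '%s\n' 'setenv bootargs "console=tty1 root=/dev/sda1 rw rootwait"' > "$demo_file"
add_forced_fsck "$demo_file"
cat "$demo_file"
rm -f "$demo_file"
```

Running the helper twice leaves the file unchanged the second time, so it is safe to push to all nodes repeatedly before running mkscr.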

Docker Swarm deploy

The Swarm module in my SaltStack does not seem to be recognized, even though I use version 2018.3.1. So I ended up executing the commands directly, which is not really a problem as I’m not going to add a node every day…

Build the master:

```shell
salt "node1.local.lan" cmd.run 'docker swarm init'
```

Add a worker:

```shell
salt "node4.local.lan" cmd.run 'docker swarm join --token xxxxx node1.local.lan:2377'
```

Add the 2nd and 3rd masters for failover:

```shell
salt "node1.local.lan" cmd.run 'docker swarm join-token manager'
salt "node3.local.lan" cmd.run 'docker swarm join --token xxxxx 192.168.1.1:2377'
salt "node2.local.lan" cmd.run 'docker swarm join --token xxxxx 192.168.1.1:2377'
```

Checking the status of all nodes with “docker node ls” now displays one leader and 2 “reachable” nodes.
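Why three masters and not two or four? Swarm managers agree through Raft, which needs a majority of managers to stay alive. The small sketch below (the helper name is mine) computes how many manager failures a given count can survive.

```shell
# Number of manager failures a Raft quorum survives: floor((n - 1) / 2).
tolerated_manager_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
    echo "$n manager(s): survives $(tolerated_manager_failures "$n") failure(s)"
done
```

With 3 managers the cluster survives the loss of one. Promoting the 4th node as well would not help, since 4 managers still only tolerate a single failure.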

Then, I deployed a custom Docker daemon configuration (daemon.json) to switch the storage driver to overlay2 (the default one is too slow on the XU4) and to allow the use of my custom Docker registry.

```json
{
  "insecure-registries": ["myregistry.local.lan:5000"],
  "storage-driver": "overlay2"
}
```

Docker images for the swarm cluster

The concept

As of now, using a container orchestrator implies either using stateless containers or using a global storage solution. I first tried to use glusterfs on all nodes. It worked perfectly but was way too slow (between 25 and 36 Mb/s even with optimized settings and IRQ affinity to the big cores).

I ended up with a simple yet very efficient solution for my needs:

- An automated daily backup of all volumes on all nodes (to a network drive)

- An automated daily mysql database backup on all nodes (run only when mysql is detected)

- Containers that are able to restore their volumes from the backup during first startup

- An automated daily clean-up of containers and volumes on all nodes

Thus, each time a node is shut down or a stack is restarted, each container can start on any node and retrieve its data automatically (if it is not stateless).
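The “restore on first startup” idea can be sketched as a function called at the top of a container entrypoint. This is an illustration under assumptions, not the code from my images: the function name, its messages and the paths you would pass to it are hypothetical. It only assumes that the archive was created from inside the volume’s data directory, as the backup script does.

```shell
# Hypothetical entrypoint helper: fill an empty volume from its backup archive.
restore_volume_if_empty() {
    data_dir=$1
    archive=$2
    # Anything already in the volume means this is not a first start.
    if [ -n "$(ls -A "$data_dir" 2>/dev/null)" ]; then
        echo "volume not empty, nothing to restore"
        return 0
    fi
    if [ -f "$archive" ]; then
        mkdir -p "$data_dir"
        tar -xzf "$archive" -C "$data_dir"
        echo "restored from $archive"
    else
        echo "no backup found, starting empty"
    fi
}
```

An entrypoint would call it with the volume mount point and the archive on the network drive before starting the real service. The emptiness test is what makes it safe on later restarts: a volume that already holds data is never overwritten.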

Daily backup script (extract):

```shell
# monthly saved backup (first day of the month)
firstdayofthemonth=`date '+%d'`
if [ "$firstdayofthemonth" = "01" ] ; then
    BACKUP_DIR="$BACKUP_DIR/monthly"
else
    # weekly saved backup (Monday)
    firstdayoftheweek=$(date +"%u")
    if [ "$firstdayoftheweek" = "1" ]; then
        BACKUP_DIR="$BACKUP_DIR/weekly"
    fi
fi

volumeList=$(ls /var/lib/docker/volumes | grep $DOCKER_VOLUME_LIST_PATTERN)
for volume in $volumeList
do
    archiveName=$(echo $volume | cut -d_ -f2-)
    # keep the previous archive until the new one is written
    mv "$BACKUP_DIR/$archiveName.tar.gz" "$BACKUP_DIR/$archiveName.tar.gz.old"
    cd /var/lib/docker/volumes/$volume/_data/
    tar -czf "$BACKUP_DIR/$archiveName.tar.gz" * 2>&1
    rm "$BACKUP_DIR/$archiveName.tar.gz.old"
done
```
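The choice of backup directory at the top of the script is easier to check once it is isolated in a function that takes the two date fields as arguments. This is a sketch of the intended logic with a hypothetical function name: the first day of the month wins, then Monday, and any other day leaves the base directory unchanged.

```shell
# Hypothetical helper: which sub-directory of BACKUP_DIR today's archives go to.
backup_subdir() {
    day_of_month=$1   # as printed by: date '+%d' (01..31)
    day_of_week=$2    # as printed by: date '+%u' (1 = Monday)
    if [ "$day_of_month" = "01" ]; then
        echo "monthly"
    elif [ "$day_of_week" = "1" ]; then
        echo "weekly"
    else
        echo ""       # plain daily backup, stays in BACKUP_DIR itself
    fi
}

backup_subdir "$(date '+%d')" "$(date '+%u')"
```

Passing the dates in as arguments means the monthly and weekly cases can be tried on any day, without waiting for a Monday.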

Daily Clean-up script:

```shell
# remove unused containers and images
docker system prune -a -f

# remove unused volumes
volumeToRemove=$(docker volume ls -qf dangling=true)
if [ ! -z "$volumeToRemove" ]; then
    docker volume rm $volumeToRemove
fi
```

Custom Docker images

All my Dockerfiles are documented and available on GitHub:

https://github.com/jit06/docker-images

Simple distributed image build

To make a simple distributed build system, I wrote some scripts that distribute my Docker image builds across the 4 Odroid HC1.

All images are then pushed to a local registry, tagged with the current date.

Local image builder that builds, tags and pushes to the registry (script name: docker_build_image):

```shell
if [ $# -lt 3 ]; then
    echo "Usage: $0 <image name> <arch> <registry>"
    echo "Example : $0 myImage armv7h myregistry.local.lan:5000"
    echo ""
    exit 0
fi

arch="$2"
imageName="$arch/$1"
registry="$3"
tag=`date +%Y%m%d`

docker build --rm -t $registry/$imageName:$tag -t $registry/$imageName:latest .
docker push $registry/$imageName
docker rmi -f $registry/$imageName:$tag
docker rmi -f $registry/$imageName:latest
```

Build several images given as arguments (script name: docker_build_batch):

```shell
# usage : default build all
if [[ "$1" == "-h" ]]; then
    echo "Usage: $0 [image folder 1] [image folder 2] ..."
    echo "Example :"
    echo " build two images : $0 mariadb mosquitto"
    echo ""
    exit 0
fi

# if any parameter, use it/them as docker image to build
if [[ $# -gt 0 ]]; then
    DOCKER_IMAGES_DIR="${@:1}"
else
    echo "Nothing to build. try -h for help"
fi

echo -e "\e[1m--- going to build the following images :"
echo -e "\e[1m$DOCKER_IMAGES_DIR\n"

# build and send to repository
for image in $DOCKER_IMAGES_DIR
do
    echo -e "\e[1m--- start build of $image:"
    cd /home/docker/$image
    docker_build_image $image armv7h myregistry.local.lan:5000
done
```

The builds are distributed with SaltStack from the Salt master, using the previous script.

The special image “archlinux” is built first if found, because all other images depend on it.

```shell
DOCKER_IMAGES_DIR=""
SPECIAL_NAME="archlinux_image_builder"
NODES[0]=""

# usage : default build all
if [[ "$1" == "-h" ]]; then
    echo "Usage: $0 [image folder 1] [image folder 2] ..."
    echo "Examples :"
    echo " build all found images : $0"
    echo " build two images : $0 mariadb archlinux_image_builder"
    echo ""
    exit 0
fi

echo -e "\e[1m--- Update repository (git pull)\n"

# update git repository
cd /home/docker
git pull

# if any parameter, use it/them as docker image to build
if [[ $# -gt 0 ]]; then
    DOCKER_IMAGES_DIR="${@:1}"
else
    DOCKER_IMAGES_DIR=$(ls -d */ | cut -f1 -d'/')
fi

echo -e "\e[1m--- going to build the following images :"
echo -e "\e[1m$DOCKER_IMAGES_DIR\n"

# if archlinux images in array, build it first
if [[ $DOCKER_IMAGES_DIR = *"$SPECIAL_NAME"* ]]; then
    echo -e "\e[1m--- found special image: $SPECIAL_NAME, start to build it first"

    echo -e "\e[1m--- update repository on node1\n"
    salt "hulk1.local.lan" cmd.run "cd /home/docker; git pull"

    echo -e "\e[1m--- build $SPECIAL_NAME image on hulk1\n"
    salt "hulk1.local.lan" cmd.run "cd /home/docker/$SPECIAL_NAME; ./mkimage-arch.sh armv7 registry.local.lan:5000"

    DOCKER_IMAGES_DIR=${DOCKER_IMAGES_DIR//$SPECIAL_NAME/}
fi

# update repository on all nodes
echo -e "\e[1m--- update repository on node[1-4]\n"
salt -E "node[1-4].local.lan" cmd.run "cd /home/docker; git pull"

# Prepare build processes on known swarm nodes
i=0
for image in $DOCKER_IMAGES_DIR
do
    NODES[$i]="${NODES[$i]} $image"
    i=$((i + 1))

    if [[ $i -gt 3 ]]; then
        i=0
    fi
done

echo -e "\e[1m--- build plan :"
echo -e "\e[1m--- node1 : ${NODES[0]}"
echo -e "\e[1m--- node2 : ${NODES[1]}"
echo -e "\e[1m--- node3 : ${NODES[2]}"
echo -e "\e[1m--- node4 : ${NODES[3]}\n"

# distribute and launch build plan
salt "node1.local.lan" cmd.run "docker_build_batch ${NODES[0]}"
salt "node2.local.lan" cmd.run "docker_build_batch ${NODES[1]}"
salt "node3.local.lan" cmd.run "docker_build_batch ${NODES[2]}"
salt "node4.local.lan" cmd.run "docker_build_batch ${NODES[3]}"

echo -e "\e[1m--- build plan finished"
```

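The build plan in the script above is a plain round-robin: image 1 goes to node1, image 2 to node2, and so on, wrapping around after the last node. Here is that step alone as a sketch that only prints the plan. The function name is mine, and apart from mariadb and mosquitto the image names in the demo are made up.

```shell
# Hypothetical helper: print one "nodeN image" line per image, round-robin.
plan_builds() {
    node_count=$1
    shift
    i=0
    for image in "$@"; do
        echo "node$((i + 1)) $image"
        i=$(( (i + 1) % node_count ))
    done
}

plan_builds 4 mariadb mosquitto nginx php redis
```

With 5 images and 4 nodes, the fifth image wraps around and lands on node1 again. Round-robin ignores how long each image takes to build, which is fine for a handful of images of similar size.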